SAA-C03 Mock Test - SAA-C03 Exam Quizzes, SAA-C03 Exam Bootcamp


What's more, part of the ExamBoosts SAA-C03 dumps is now free: https://drive.google.com/open?id=1sx1Sj6nefFwWapSAIWV5peNiwMAwwIvU

Our online resources and events let you focus on learning exactly what you need, on your own timeframe. We will email you the latest SAA-C03 dumps PDF as soon as there is any update to the certification exam. You therefore agree that the Company shall be entitled, in addition to its other rights, to seek and obtain injunctive relief for any violation of these Terms and Conditions without the filing or posting of any bond or surety. Exam description: SAA-C03 is a popular exam for the Amazon AWS Certified Solutions Architect - Associate (SAA-C03) certification.

Excellent, valid SAA-C03 VCE dumps can help you achieve your goals ahead of your peers.


Passing the SAA-C03 exam can open up better career opportunities.

Our SAA-C03 learning dumps simulate the real test environment. If you read and study all of the ExamBoosts Amazon AWS Certified Solutions Architect SAA-C03 exam dumps, you can pass the test on the first attempt.

In addition, we provide free updates for one year after purchase. What's more, the interesting and interactive SAA-C03 online test engine can inspire your enthusiasm for the actual test.

We also offer discounts on the SAA-C03 learning materials from time to time, and our after-sales service has been tested and verified over the years. The SAA-C03 training guide is fully tailored to your needs.

NEW QUESTION 54

A company has a data ingestion workflow that includes the following components:

- An Amazon Simple Notification Service (Amazon SNS) topic that receives notifications about new data deliveries

- An AWS Lambda function that processes and stores the data

The ingestion workflow occasionally fails because of network connectivity issues. When a failure occurs, the corresponding data is not ingested unless the company manually reruns the job. What should a solutions architect do to ensure that all notifications are eventually processed?

- A. Modify the Lambda function's configuration to increase the CPU and memory allocations for the function.

- B. Configure the Lambda function for deployment across multiple Availability Zones.

- C. Configure an Amazon Simple Queue Service (Amazon SQS) queue as the on-failure destination. Modify the Lambda function to process messages in the queue.

- D. Configure the SNS topic's retry strategy to increase both the number of retries and the wait time between retries.

Answer: C

NEW QUESTION 55

A company plans to migrate a MySQL database from an on-premises data center to the AWS Cloud.

This database will be used by a legacy batch application that has steady-state workloads in the morning but has its peak load at night for the end-of-day processing. You need to choose an EBS volume that can handle a maximum of 450 GB of data and can also be used as the system boot volume for your EC2 instance.

Which of the following is the most cost-effective storage type to use in this scenario?

- A. Amazon EBS Cold HDD (sc1)

- B. Amazon EBS General Purpose SSD (gp2)

- C. Amazon EBS Throughput Optimized HDD (st1)

- D. Amazon EBS Provisioned IOPS SSD (io1)

Answer: B

Explanation:

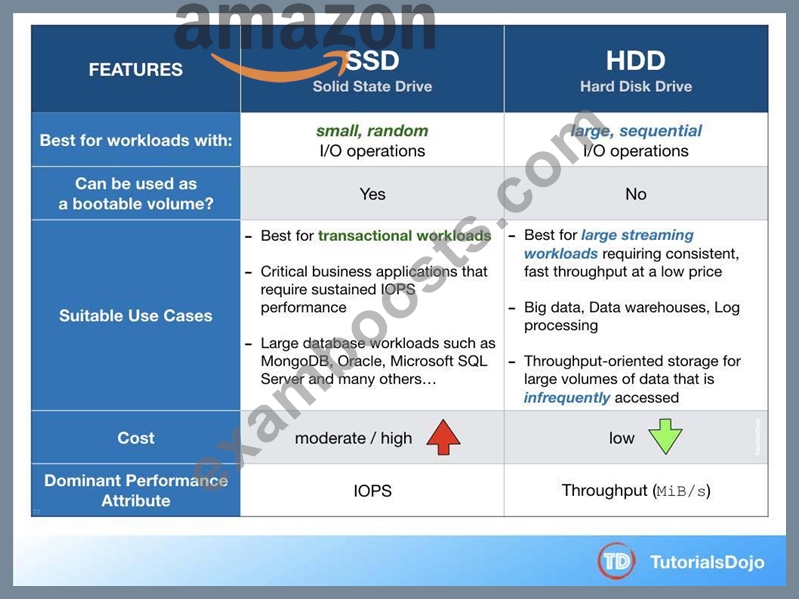

In this scenario, a legacy batch application with steady-state workloads requires a relational MySQL database. The EBS volume must handle a maximum of 450 GB of data and must also be usable as the system boot volume for your EC2 instance. Since HDD volumes cannot be used as boot volumes, we can narrow down our options to the SSD volumes. In addition, SSD volumes are more suitable for transactional database workloads, as shown in the table above.

General Purpose SSD (gp2) volumes offer cost-effective storage that is ideal for a broad range of workloads. These volumes deliver single-digit millisecond latencies and the ability to burst to 3,000 IOPS for extended periods of time. AWS designs gp2 volumes to deliver the provisioned performance 99% of the time. A gp2 volume can range in size from 1 GiB to 16 TiB.
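The gp2 performance figures above can be turned into a quick calculation for the 450 GB volume in this question. The sketch below assumes the gp2 model described in the EBS volume-types documentation: a baseline of 3 IOPS per GiB (floor of 100 IOPS, ceiling of 16,000 IOPS), a burst ceiling of 3,000 IOPS, and a full credit bucket of 5.4 million I/O credits. It also treats the scenario's 450 GB as 450 GiB. The function names are hypothetical helpers, not part of any AWS SDK.

```python
from typing import Optional

# Assumed gp2 performance model (per the EBS volume-types documentation).
GP2_IOPS_PER_GIB = 3             # baseline IOPS earned per GiB of volume size
GP2_MIN_IOPS = 100               # baseline floor for small volumes
GP2_MAX_IOPS = 16_000            # baseline ceiling for very large volumes
GP2_BURST_IOPS = 3_000           # burst ceiling for volumes below 1,000 GiB
GP2_INITIAL_CREDITS = 5_400_000  # full I/O credit bucket


def gp2_baseline_iops(size_gib: int) -> int:
    """Return the baseline IOPS of a gp2 volume of the given size."""
    if not 1 <= size_gib <= 16_384:
        raise ValueError("gp2 volumes range from 1 GiB to 16 TiB (16,384 GiB)")
    return min(max(GP2_IOPS_PER_GIB * size_gib, GP2_MIN_IOPS), GP2_MAX_IOPS)


def gp2_full_burst_seconds(size_gib: int) -> Optional[float]:
    """Seconds a full credit bucket can sustain 3,000 IOPS, or None when the
    baseline already meets or exceeds the burst ceiling (no bursting needed)."""
    baseline = gp2_baseline_iops(size_gib)
    if baseline >= GP2_BURST_IOPS:
        return None
    # Credits drain at the difference between burst IOPS and baseline IOPS.
    return GP2_INITIAL_CREDITS / (GP2_BURST_IOPS - baseline)


if __name__ == "__main__":
    size = 450  # the scenario's volume size, treated as GiB
    print(gp2_baseline_iops(size))                       # 1350
    print(round(gp2_full_burst_seconds(size) / 60, 1))   # 54.5 (minutes)
```

Under these assumptions, a 450 GiB gp2 volume idles at 1,350 IOPS during the steady morning load and can burst to 3,000 IOPS for close to an hour of the nightly peak, which is why a bursting SSD fits this workload without paying for provisioned IOPS.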

Amazon EBS Provisioned IOPS SSD (io1) is incorrect because this is not the most cost-effective EBS type and is primarily used for critical business applications that require sustained IOPS performance.

Amazon EBS Throughput Optimized HDD (st1) is incorrect because this is primarily used for frequently accessed, throughput-intensive workloads. Although it is a low-cost HDD volume, it cannot be used as a system boot volume.

Amazon EBS Cold HDD (sc1) is incorrect. Although Amazon EBS Cold HDD provides lower cost HDD volume compared to General Purpose SSD, it cannot be used as a system boot volume.
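The elimination above (discard the HDD types because they cannot be boot volumes, then pick the cheapest remaining type) can be expressed as a small filter. In this sketch the `cost_rank` values are an assumption reflecting the usual relative per-GB price ordering (sc1 < st1 < gp2 < io1), not actual prices, and the size ranges are the documented minimum and maximum sizes for each type. The `VolumeType` class and `cheapest_volume` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class VolumeType:
    name: str
    bootable: bool  # SSD types can be boot volumes; HDD types cannot
    min_gib: int
    max_gib: int
    cost_rank: int  # assumed relative price per GB (1 = cheapest), not real prices


CANDIDATES: List[VolumeType] = [
    VolumeType("sc1", bootable=False, min_gib=125, max_gib=16_384, cost_rank=1),
    VolumeType("st1", bootable=False, min_gib=125, max_gib=16_384, cost_rank=2),
    VolumeType("gp2", bootable=True, min_gib=1, max_gib=16_384, cost_rank=3),
    VolumeType("io1", bootable=True, min_gib=4, max_gib=16_384, cost_rank=4),
]


def cheapest_volume(size_gib: int, needs_boot: bool) -> str:
    """Return the lowest-ranked volume type that satisfies size and boot needs."""
    eligible = [
        v for v in CANDIDATES
        if v.min_gib <= size_gib <= v.max_gib and (v.bootable or not needs_boot)
    ]
    if not eligible:
        raise ValueError("no volume type satisfies the constraints")
    return min(eligible, key=lambda v: v.cost_rank).name


print(cheapest_volume(450, needs_boot=True))   # gp2
print(cheapest_volume(450, needs_boot=False))  # sc1
```

Dropping the boot requirement flips the answer to sc1, which shows that the boot-volume constraint, not the data size, is what rules out the cheaper HDD options in this question.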

Reference:

https://docs.aws.amazon.com/AWSEC2/latest/UserGuide/EBSVolumeTypes.html#EBSVolumeTypes_gp2

Amazon EBS Overview - SSD vs HDD:

https://www.youtube.com/watch?v=LW7x8wyLFvw

Check out this Amazon EBS Cheat Sheet:

https://tutorialsdojo.com/amazon-ebs/

NEW QUESTION 56

A company is using multiple AWS accounts that are consolidated using AWS Organizations. They want to copy several S3 objects to another S3 bucket that belongs to a different AWS account, which they also own. The Solutions Architect was instructed to set up the necessary permissions for this task and to ensure that the destination account owns the copied objects, not the account they were sent from.

How can the Architect accomplish this requirement?

- A. Enable the Requester Pays feature in the source S3 bucket. The fees would be waived through Consolidated Billing since both AWS accounts are part of AWS Organizations.

- B. Configure cross-account permissions in S3 by creating an IAM customer-managed policy that allows an IAM user or role to copy objects from the source bucket in one account to the destination bucket in the other account. Then attach the policy to the IAM user or role that you want to use to copy objects between accounts.

- C. Set up cross-origin resource sharing (CORS) in S3 by creating a bucket policy that allows an IAM user or role to copy objects from the source bucket in one account to the destination bucket in the other account.

- D. Connect the two S3 buckets from two different AWS accounts to Amazon WorkDocs. Set up cross-account access to integrate the two S3 buckets. Use the Amazon WorkDocs console to copy the objects from one account to the other with modified object ownership assigned to the destination account.

Answer: B

Explanation:

By default, an S3 object is owned by the account that uploaded the object. That's why granting the destination account the permissions to perform the cross-account copy makes sure that the destination owns the copied objects. You can also change the ownership of an object by changing its access control list (ACL) to bucket-owner-full-control.

However, object ACLs can be difficult to manage for multiple objects, so it's a best practice to grant programmatic cross-account permissions to the destination account. Object ownership is important for managing permissions using a bucket policy: for a bucket policy to apply to an object in the bucket, the object must be owned by the account that owns the bucket. You can also manage object permissions with the object's ACL, but because ACLs are hard to manage at scale, the bucket policy is the recommended centralized method for setting permissions.

To be sure that a destination account owns an S3 object copied from another account, grant the destination account the permissions to perform the cross-account copy. Follow these steps to configure cross-account permissions to copy objects from a source bucket in Account A to a destination bucket in Account B:

- Attach a bucket policy to the source bucket in Account A.

- Attach an AWS Identity and Access Management (IAM) policy to a user or role in Account B.

- Use the IAM user or role in Account B to perform the cross-account copy.
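The three steps above can be sketched by generating the two policy documents. This is a minimal sketch, assuming the source bucket is named `source-bucket` in Account A, the destination bucket is `destination-bucket` in Account B, and Account B's ID is `222222222222`; all three are hypothetical placeholders. The permissions follow the common cross-account copy pattern (list and read on the source, list and write on the destination). The copy itself (step 3) is then run with Account B's credentials, for example with `aws s3 cp` or boto3's `copy_object`, so that Account B writes, and therefore owns, the new objects.

```python
import json

# Hypothetical placeholders; substitute your own bucket names and account ID.
SOURCE_BUCKET = "source-bucket"            # in Account A
DESTINATION_BUCKET = "destination-bucket"  # in Account B
ACCOUNT_B_ID = "222222222222"


def source_bucket_policy(source: str, account_b_id: str) -> dict:
    """Step 1: bucket policy on the source bucket that lets Account B list and read it."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "AllowAccountBToListAndRead",
                "Effect": "Allow",
                "Principal": {"AWS": f"arn:aws:iam::{account_b_id}:root"},
                "Action": ["s3:ListBucket", "s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{source}", f"arn:aws:s3:::{source}/*"],
            }
        ],
    }


def account_b_iam_policy(source: str, destination: str) -> dict:
    """Step 2: identity policy for the IAM user or role in Account B."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "ReadFromSource",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:GetObject"],
                "Resource": [f"arn:aws:s3:::{source}", f"arn:aws:s3:::{source}/*"],
            },
            {
                "Sid": "WriteToDestination",
                "Effect": "Allow",
                "Action": ["s3:ListBucket", "s3:PutObject", "s3:PutObjectAcl"],
                "Resource": [
                    f"arn:aws:s3:::{destination}",
                    f"arn:aws:s3:::{destination}/*",
                ],
            },
        ],
    }


if __name__ == "__main__":
    # Print both documents as JSON, ready to paste into the console or CLI.
    print(json.dumps(source_bucket_policy(SOURCE_BUCKET, ACCOUNT_B_ID), indent=2))
    print(json.dumps(account_b_iam_policy(SOURCE_BUCKET, DESTINATION_BUCKET), indent=2))
```

Granting Account A only read access to be pulled by Account B, rather than having Account A push the objects, is what keeps ownership with the destination account.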

Hence, the correct answer is: Configure cross-account permissions in S3 by creating an IAM customer-managed policy that allows an IAM user or role to copy objects from the source bucket in one account to the destination bucket in the other account. Then attach the policy to the IAM user or role that you want to use to copy objects between accounts.

The option that says: Enable the Requester Pays feature in the source S3 bucket. The fees would be waived through Consolidated Billing since both AWS accounts are part of AWS Organizations is incorrect because the Requester Pays feature is primarily used if you want the requester, instead of the bucket owner, to pay the cost of the data transfer request and download from the S3 bucket. This solution lacks the necessary IAM permissions to satisfy the requirement. The most suitable solution here is to configure cross-account permissions in S3.

The option that says: Set up cross-origin resource sharing (CORS) in S3 by creating a bucket policy that allows an IAM user or role to copy objects from the source bucket in one account to the destination bucket in the other account is incorrect because CORS only defines a way for client web applications loaded in one domain to interact with resources in a different domain; it does not grant access across AWS accounts.

The option that says: Connect the two S3 buckets from two different AWS accounts to Amazon WorkDocs. Set up cross-account access to integrate the two S3 buckets. Use the Amazon WorkDocs console to copy the objects from one account to the other with modified object ownership assigned to the destination account is incorrect because Amazon WorkDocs is commonly used to easily collaborate, share content, provide rich feedback, and collaboratively edit documents with other users. There is no direct way for you to integrate WorkDocs and an Amazon S3 bucket owned by a different AWS account.

A better solution here is to use cross-account permissions in S3 to meet the requirement.

References:

https://docs.aws.amazon.com/AmazonS3/latest/dev/example-walkthroughs-managing-access-example2.html

https://aws.amazon.com/premiumsupport/knowledge-center/copy-s3-objects-account/

https://aws.amazon.com/premiumsupport/knowledge-center/cross-account-access-s3/

Check out this Amazon S3 Cheat Sheet:

https://tutorialsdojo.com/amazon-s3/

NEW QUESTION 57

A development team runs monthly resource-intensive tests on its general purpose Amazon RDS for MySQL DB instance with Performance Insights enabled. The testing lasts for 48 hours once a month and is the only process that uses the database. The team wants to reduce the cost of running the tests without reducing the compute and memory attributes of the DB instance.

Which solution meets these requirements MOST cost-effectively?

- A. Use an Auto Scaling policy with the DB instance to automatically scale when tests are completed.

- B. Stop the DB instance when tests are completed. Restart the DB instance when required.

- C. Modify the DB instance to a low-capacity instance when tests are completed. Modify the DB instance again when required.

- D. Create a snapshot when tests are completed. Terminate the DB instance and restore the snapshot when required.

Answer: D

NEW QUESTION 58

......
